Whatever their policy merits, safety limitations on AI development generally do not raise First Amendment issues.

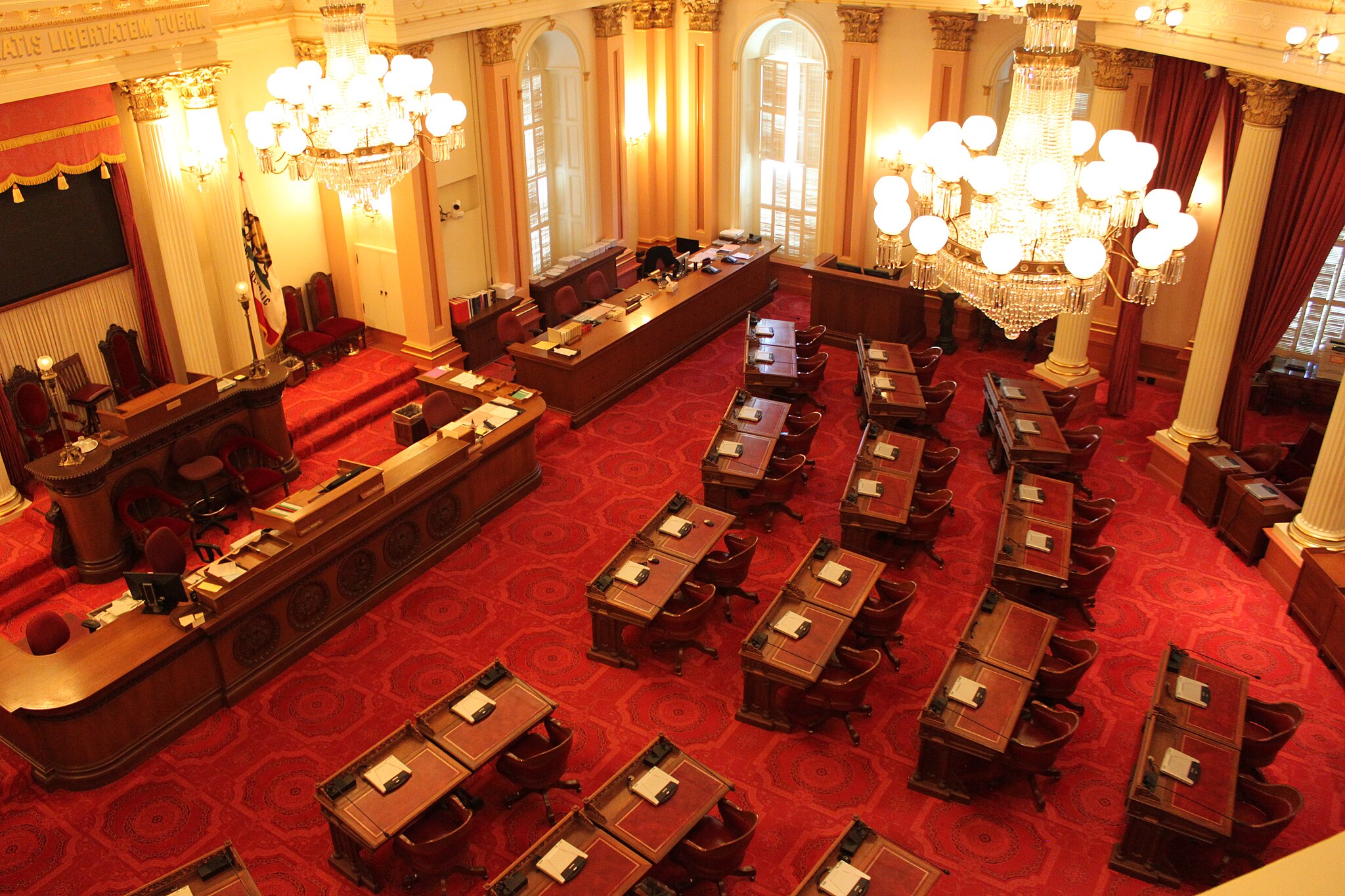

Last month, the California Senate passed Senate Bill 1047, the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act. It looks like it may soon become law.

If enacted, the bill would impose various safety requirements on the developers of new frontier artificial intelligence (AI) models. The bill’s progress through the legislative process has, predictably, triggered various policy and legal critiques. On policy, critics contend that the bill overregulates (though some worry that it doesn’t go far enough), that it could stifle innovation, and that it could squash competition in the AI industry. On law, critics have argued that safety regulations like SB 1047 violate the First Amendment.

We disagree among ourselves about the policy merits of SB 1047. But we agree that the First Amendment criticism is quite weak.

Critics have two main First Amendment theories. The first is that SB 1047 makes it more difficult for AI companies to freely distribute the “weights” of their models. (Model weights are the numerical parameters that define how a generative AI model creates its output.) Model weights, the theory goes, are in some ways analogous to the code underlying other kinds of software. Code, the critics say (often citing the famous Bernstein line of cases from the 1990s and their progeny), is speech. And thus, the argument goes, forbidding the dissemination of code is censorship of speech.

The second theory relies on the idea that the outputs of models are themselves protected speech. SB 1047 would, for example, forbid the release of a frontier model that could be easily induced to output detailed instructions for making a bioweapon. Critics argue that such restrictions on what an AI model may “say” are unconstitutional limitations on expression.

These are not strong legal arguments, at least not in the very broad way critics have made them. Yes, some kinds of code for some kinds of software are forms of programmer expression. And some kinds of outputs of some kinds of software are the protected speech of the software’s creator or user. But not all kinds. And straightforward features of frontier AI models make regulating them for safety purposes extremely unlikely to interfere with protected speech.

The reasons can be stated simply. Model weights will almost never be the expression of any human being. No human writes them: They are created through vast machine learning operations. No human can even read them: It took a group of the world’s leading experts in AI interpretability months, and large computational resources, to even begin unpacking the inner workings of a current medium-sized model. Thus, humans have virtually no meaningful influence over or understanding of what model weights might communicate in their raw form. In general, code like that, including the “machine code” of traditional software, is not well understood as human expression. Such materials fail to meet the Supreme Court’s definition of communicative conduct: They are not designed “to convey a particularized message,” nor is the likelihood great that any such message “would be understood” by those who view them.

As to the AI system’s outputs, these are by design not the expressions of any human being—neither of an AI system’s creators nor its users. Some software is designed so that its outputs will convey something in particular from the mind of the creator or user to some audience. Think, for example, of a narrative video game.

But frontier AI systems are designed to do the exact opposite. They are set up not to “say” something in particular but, rather, to be able to say essentially everything, including innumerable things that neither the creator nor the user conceives or endorses. ChatGPT can give you an accurate description of the mating behavior of the western corn rootworm, a topic on which OpenAI’s engineers are almost certainly ignorant. ChatGPT can tell you that, in its view, the best Beatle is Paul, an opinion that you, the user, and everyone at OpenAI might reject. Such outputs are not well understood as communications from any human to any other human. Indeed, model creators often go to great lengths to convey this fact. This is the whole point of large language models: to be conversational engines whose range extends far beyond the limits of their creators’ and users’ own knowledge, beliefs, and intentions. The same point applies, of course, to other generative models, like video generators or natively multimodal models.

We do not want to overstate our case. Our view is not that AI systems, their weights, and their outputs have no First Amendment dimension at all, such that no law regulating them could be unconstitutional. We agree, for example, that a law forbidding AI systems to critique the government would be rightly struck down. The mistake, however, is in thinking of the weights and outputs of AI systems as necessarily forms of human expression. General AI systems are instead better understood as tools for composing, transmitting, or refining human expression, or for entirely non-expressive purposes, like designing proteins or predicting biological interactions. The First Amendment affords tools for expression some protections, but they are weaker than its protections for speech itself.

We think that, under the correct First Amendment standards, SB 1047 and similar laws should be upheld. SB 1047 requires developers of frontier models—those that are trained using very large amounts of compute, or that have capabilities equivalent to models trained with such compute—to take one of two approaches to AI safety. One option is to certify that the developer has “reasonably exclud[ed] the possibility” that the model has certain extremely hazardous capabilities, like enabling the creation of biological weapons capable of causing mass casualties. If the developer cannot certify that the model is incapable of such acts, it must instead implement various precautions to prevent users from using the model in such dangerous ways. Because such requirements likely cannot be met with a model whose weights have been released openly, the bill does restrict some open sourcing. But, crucially, it does so only when the developer cannot certify that the model is safe. And not perfectly safe—just safe enough that it cannot reasonably be expected to help cause mass casualty events or similarly grievous harm.

Such a bill does not unlawfully restrict expression. The first restriction regulates the transmission of model weights because of what they do—enabling dangerous capabilities—not because of what they express (which, as we’ve argued, is generally nothing). This is the type of restriction that has been repeatedly upheld even as to source code, which is far more expressive than opaque model weights.

The second restriction regulates either model weights (by preventing the creation and distribution of a model with hazardous capabilities) or model outputs (by requiring safeguards to prevent a model from harming others with hazardous capabilities). Here, too, the bill would pass First Amendment muster. AI output is generally not protected human expression, and so the First Amendment concern with regulating output is either to prevent overly onerous restrictions on tools for expression, or to protect the rights of listeners. In such contexts, the Supreme Court applies a deferential form of constitutional review. Often, it is intermediate scrutiny, which requires that a restriction “furthers an important or substantial governmental interest,” which “is unrelated to the suppression of free expression,” and that “the incidental restriction on alleged First Amendment freedoms [be] no greater than is essential to the furtherance of that interest.”

Avoiding the catastrophes contemplated in SB 1047 is undoubtedly a substantial government interest; the Court has held a broad range of government objectives to be substantial, including maintaining access to television broadcasting and preventing petty crime. And the bill, which focuses specifically on preventing the harmful exercise of hazardous capabilities, is sufficiently tailored to its legitimate ends. According to the Court, a regulation “need not be the least speech-restrictive means of advancing the Government’s interests” to be upheld.

We could imagine alternative versions of SB 1047 (or versions of the implementing regulations that California will write once the bill is passed) that might fail this permissive test. Suppose, for example, that California implemented a blanket policy of rejecting developers’ safety certifications without reading them. Or suppose it purposely set safety standards impossibly high, with the intent of ensuring that they would never be satisfied. Such rules would not be well tailored to the law’s legitimate purpose. But these are extreme cases. Nothing in SB 1047’s text has this character, and there is currently no reason to think that California’s regulators would implement it in such a way.

Note that these extreme versions of the regulations would not be unconstitutional simply because they might prevent the release of frontier AI models. To understand this, imagine that California writes a regulation implementing SB 1047 that requires safety certifications to involve “red teaming.” There, safety experts would use creative prompting to try to induce a frontier model to, for example, design a novel bioweapon. If the model complied and successfully designed such a bioweapon, it would fail the test. The model developer might not be able to stop the model from complying with such requests; indeed, there is no reliable method known today to prevent users from circumventing model safeguards. Then, the developer would have to either implement controls sufficient to prevent users from making such requests or shelve the model until significant safety advances are made. In our view, none of this would violate the First Amendment. Nor, for the same reasons, would a “burn ban” implemented during a dry year in a wildfire-prone state be unconstitutional, even if that meant that protesters could burn no effigies for a time.

One last point: Even if AI model weights or output are held to have some expressive component, we are skeptical that the First Amendment would stand in the way of safety rules like those advanced in SB 1047. For one thing, courts also apply intermediate scrutiny to functional regulations of conduct that has both expressive and functional elements, like source code used to evade copyright law. For another, the Supreme Court has long permitted restrictions on speech that it considers of low social value or very dangerous, like speech integral to criminal conduct, or speech materially assisting foreign terrorists. The type of hazardous capabilities regulated by SB 1047 would almost certainly fall into one of those categories.

To emphasize, we take no position in this piece (in part because we don’t agree among ourselves) on whether SB 1047 is good policy. But we agree that that’s what the debate should be about—not whether it can be enacted under the First Amendment.

– Peter N. Salib, Doni Bloomfield, and Alan Z. Rozenshtein. Published courtesy of Lawfare.