A summary of the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.

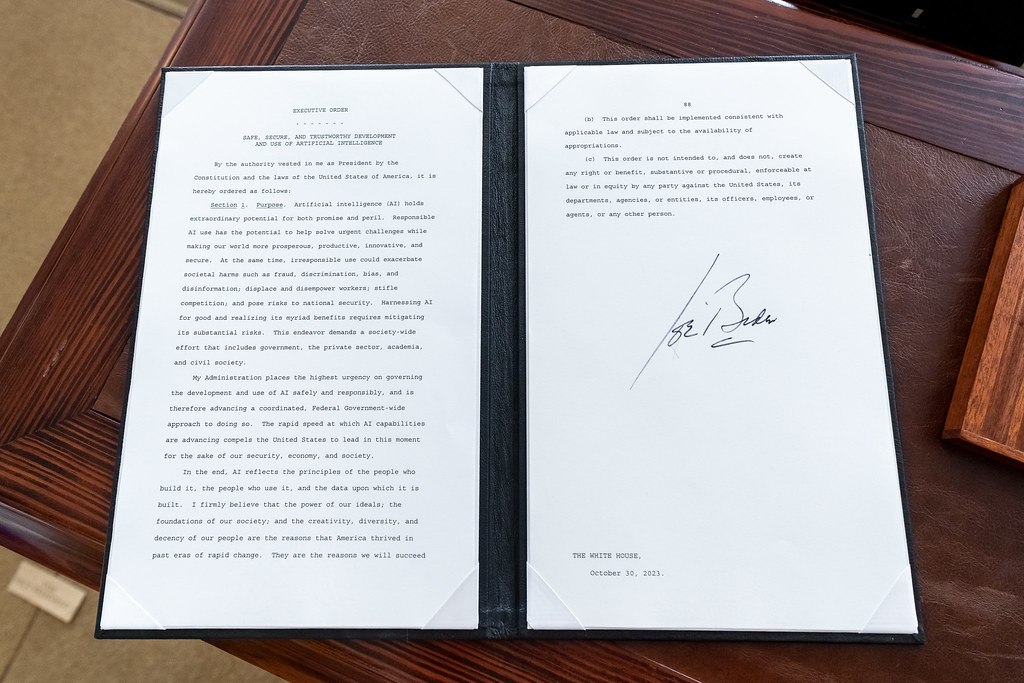

On Oct. 30, 2023, President Biden signed the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. The order requires developers of the most powerful artificial intelligence (AI) systems to share their safety test results and other critical information with the U.S. government, and it calls for U.S. cloud providers to report foreign customers who use their services to train large AI models. It also directs agencies to establish standards for AI safety and testing and calls for action on a range of fronts, including cybersecurity, civil rights, and labor market impacts. The order builds on several earlier Biden administration actions on AI, including the Blueprint for an AI Bill of Rights, voluntary commitments from leading AI companies, and the National Institute of Standards and Technology’s (NIST’s) AI Risk Management Framework.

Section 1: Purpose

Section 1 of the executive order emphasizes AI’s significant potential to address urgent challenges and contribute to prosperity and security. It also acknowledges the risks: irresponsible use could exacerbate societal harms such as fraud, bias, and disinformation; displace and disempower workers; stifle competition; and pose national security threats. The section stresses that harnessing AI’s benefits while mitigating these risks requires a society-wide effort involving government, the private sector, academia, and civil society.

The order asserts that the administration is committed to leading the way on safe and responsible AI development and use, and it stresses that the rapid advance of AI capabilities makes this work urgent. The order deems this leadership essential to the security, economy, and society of the United States.

Section 2: Policy and Principles

Section 2 outlines the Biden administration’s policy to govern AI development and use according to eight guiding principles and priorities. Under these principles, AI and the policies governing it must:

(a) Be safe and secure. This requires robust, reliable, repeatable, and standardized evaluations of AI systems, along with policies, institutions, and mechanisms to test, understand, and mitigate risks before systems are put to use. These steps address the most pressing AI risks, including those in biotechnology, cybersecurity, and critical infrastructure, as well as other national security dangers. Testing and evaluations, including post-deployment performance monitoring, together with effective labeling and content provenance mechanisms, will help provide a strong foundation for addressing AI’s risks without sacrificing its benefits.

(b) Promote responsible innovation and competition to allow the United States to lead in AI.

(c) Support American workers. As AI creates new jobs and industries, all workers should benefit from the resulting opportunities, including through collective bargaining. This principle calls for job training and education to support a diverse workforce and for ensuring that AI is not deployed in ways that “undermine rights, worsen job quality, encourage undue worker surveillance, lessen market competition, introduce new health and safety risks, or cause harmful labor-force disruptions.”

(d) Advance equity and civil rights, so that AI is not used to disadvantage people who are already too often denied equal opportunity and justice or to deepen discrimination and bias.

(e) Protect consumer interests by enforcing existing consumer protection laws and enacting safeguards against fraud, unintended bias, discrimination, privacy infringements, and other potential harms from AI.

(f) Safeguard privacy and civil liberties by ensuring that the collection, use, and retention of data are lawful and secure and that privacy and confidentiality risks are mitigated.

(g) Manage risks from the federal government’s use of AI and bolster its internal capacity to regulate, govern, and support the responsible use of AI.

(h) Lead global societal, economic, and technological progress, including by pioneering systems and safeguards for deploying technology responsibly. This principle includes engaging with allies and partners to develop a framework for managing AI’s risks, unlocking its potential for good, and uniting on shared challenges.

Section 3: Definitions

Section 3 defines terms that are critical to interpreting and implementing the executive order. For example, artificial intelligence is defined as “a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations, or decisions influencing real or virtual environments.” An AI system, in turn, is “any data system, software, hardware, application, tool, or utility that operates in whole or in part using AI.” Machine learning means “a set of techniques that can be used to train AI algorithms to improve performance at a task based on data.”

Section 4: Ensuring the Safety and Security of AI Technology

Section 4, the longest section of the executive order, has eight subsections focused on AI safety and security. It orders the creation of guidelines, standards, and best practices to promote AI safety and security (section 4.1) and then sets forth measures for ensuring safe and reliable AI (4.2). It addresses anticipated challenges of deploying AI, including managing AI in critical infrastructure and cybersecurity (4.3) and reducing various types of risk (4.4 and 4.5). It also looks forward to the continued development of AI, including the risks and benefits of widely available dual-use foundation models (4.6), the use of federal data for AI training (4.7), and the need to comprehensively evaluate the national security implications of AI by developing a National Security Memorandum (4.8).

4.1 Developing Guidelines, Standards, and Best Practices for AI Safety and Security

Section 4.1 of the executive order requires the secretary of commerce, acting through the NIST director and in coordination with the secretary of energy and the secretary of homeland security, to establish guidelines for developing safe and secure AI within 270 days of the order, with a focus on generative AI and dual-use foundation models. This directive calls for developing resources, aligned with the NIST AI Risk Management Framework, that address secure development practices. It also requires guidance for AI red-teaming, defined as structured testing efforts to find flaws and vulnerabilities in AI systems, often in a controlled environment and in collaboration with AI developers. In addition, it calls for a government initiative to create benchmarks for evaluating and auditing AI systems, with a specific focus on cybersecurity and biosecurity risks. In parallel, subsection 4.1 directs the secretary of commerce to work with the secretary of energy and the National Science Foundation (NSF) to support the development and availability of testbeds for safe AI technologies. The secretary of energy is to develop tools and testbeds for evaluating AI models against threats in nuclear, nonproliferation, and other security domains, coordinating with relevant stakeholders and using existing solutions where available.

4.2 Ensuring Safe and Reliable AI

Section 4.2 directs the secretary of commerce, using authority under the Defense Production Act, to take measures ensuring the continuous availability of safe, reliable, and effective AI, particularly for national defense and the protection of critical infrastructure. Within 90 days of the order, the secretary must require reporting from two key groups. First, companies developing or intending to develop potential dual-use foundation models must provide detailed information on an ongoing basis. This includes their activities related to training, developing, or producing such models; the measures they take to protect the integrity of those processes against sophisticated threats; the ownership and possession of model weights and the measures taken to protect them; and the results of any red-team testing of these models, along with descriptions of the safety measures implemented in response.

Second, entities acquiring, developing, or possessing potential large-scale computing clusters must report their activities. This requirement includes disclosing the existence and locations of these clusters and detailing their total computing power. These reporting requirements aim to verify and ensure AI’s reliability and safety, especially concerning its use in critical national areas like cybersecurity and biosecurity.

These reporting duties apply to potential dual-use foundation models, which the order defines as models trained on broad data using self-supervision that contain at least tens of billions of parameters, are applicable across a wide range of contexts, and exhibit, or could easily be modified to exhibit, high levels of performance at tasks that pose serious security risks. Such risks include lowering the barrier to entry for non-experts to develop or acquire chemical, biological, radiological, and nuclear (CBRN) weapons; enabling powerful offensive cyber operations; and permitting the evasion of human control or oversight through deception or obfuscation.

The order also requires the secretary of commerce to propose regulations requiring U.S. Infrastructure as a Service (IaaS) providers to report when a foreign person transacts with them to train a large AI model with potential capabilities that could be used in malicious cyber-enabled activity. These reports must identify the foreign client and describe the model training involved. U.S. IaaS providers must also ensure that their foreign resellers report such transactions. In addition, the secretary of commerce will determine the technical conditions under which a large AI model is considered to have potential capabilities that could be used in malicious cyber-enabled activity.

Furthermore, within 180 days, the secretary of commerce must propose regulations requiring IaaS providers to ensure that their foreign resellers verify the identity of any foreign person who obtains an IaaS account, and setting minimum standards for that verification and for recordkeeping. The order also authorizes the secretary of commerce to issue rules and regulations and take other actions necessary to enforce these provisions, using the authority granted by the International Emergency Economic Powers Act.

4.3 Managing AI in Critical Infrastructure and in Cybersecurity

Section 4.3 addresses both the risks of AI in critical infrastructure and the use of AI to strengthen cybersecurity. Under the order, agency heads with regulatory authority over critical infrastructure must assess AI-related risks in their sectors and report to the secretary of homeland security within 90 days, and at least annually thereafter. In addition, within 150 days, the secretary of the treasury must publish a report on best practices for financial institutions to manage AI-specific cybersecurity risks. Within 180 days, the secretary of homeland security, in coordination with the secretary of commerce, must incorporate the AI Risk Management Framework into safety and security guidelines for critical infrastructure owners and operators.

Subsequently, within 240 days of the completion of these guidelines, the assistant to the president for national security affairs and the director of the Office of Management and Budget (OMB) will coordinate with agencies to consider mandating the guidelines through regulatory or other appropriate action, and independent regulatory agencies are encouraged to do the same within their respective spheres. The secretary of homeland security will also establish an AI Safety and Security Board, an advisory committee of AI experts from the private sector, academia, and government, to provide expertise and recommendations for improving AI security in critical infrastructure. Separately, within 180 days, the secretaries of defense and homeland security will conduct pilot projects using AI capabilities, such as large language models, to find and fix vulnerabilities in government systems. They must then report their findings and lessons learned to the assistant to the president for national security affairs within 270 days of the order.

4.4 Reducing Risks at the Intersection of AI and CBRN Threats

Section 4.4 of the executive order addresses concerns that AI could be misused to aid the development or use of CBRN threats, with a particular focus on biological weapons. Within 180 days, the secretary of homeland security, working with the secretary of energy and the director of the Office of Science and Technology Policy (OSTP), must evaluate AI’s potential to enable CBRN threats. This work includes consulting experts to assess AI model capabilities and risks, and submitting a report to the president with recommendations for regulating AI models with respect to CBRN risks. Additionally, within 120 days of the order, the secretary of defense is to contract with the National Academies of Sciences, Engineering, and Medicine for a study of how AI can increase biosecurity risks and how those risks can be mitigated, including the security implications of using government-owned biological data to train AI models.

Synthetic nucleic acids are DNA or RNA molecules created or redesigned through synthetic-biology methods. One concern is that AI models could substantially increase the ability of bad actors to design dangerous synthetic nucleic acid sequences. To address this risk, the director of OSTP will establish a framework encouraging providers of synthetic nucleic acid sequences to implement screening mechanisms, including ways to identify risky biological sequences and to verify that screening performs as intended. Within 180 days, the secretary of commerce, acting through NIST and in coordination with OSTP, must engage stakeholders to develop specifications and best practices for nucleic acid synthesis screening. Agencies that fund life sciences research must require, as a condition of funding, that synthetic nucleic acids be procured from providers that adhere to this framework. The secretary of homeland security will develop a framework for evaluating and stress testing these procurement screening systems and will report annually with recommendations for strengthening them.

4.5 Reducing the Risks Posed by Synthetic Content

Section 4.5 aims to build capabilities for identifying and labeling AI-generated content. The secretary of commerce must produce a report identifying existing standards, tools, methods, and practices for authenticating content and tracking its provenance, labeling synthetic content (for example, through watermarking), detecting synthetic content, preventing generative AI from producing child sexual abuse material, and auditing and maintaining synthetic content. Based on this report, the director of the OMB must issue guidance to agencies for labeling and authenticating the synthetic content they produce or publish.
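To make the idea of “authenticating” a synthetic-content label concrete, here is a minimal Python sketch. It is a hypothetical illustration, not a technique the order prescribes: the manifest fields (`ai_generated`, `generator`, `sha256`), the function names, and the shared secret key are all assumptions. It uses a keyed HMAC from Python’s standard library for simplicity, whereas real provenance standards typically rely on public-key signatures so that anyone can check a label without holding the signing key.

```python
import hashlib
import hmac
import json


def label_content(content: bytes, generator: str, key: bytes) -> dict:
    """Attach a hypothetical 'AI-generated' manifest bound to the content's hash."""
    manifest = {
        "ai_generated": True,                            # the label itself
        "generator": generator,                          # which system produced the content
        "sha256": hashlib.sha256(content).hexdigest(),   # binds the label to these exact bytes
    }
    # Serialize deterministically so signer and verifier authenticate identical bytes.
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "tag": tag}


def verify_label(content: bytes, label: dict, key: bytes) -> bool:
    """Return True only if the manifest is untampered and matches the content."""
    manifest = label["manifest"]
    if hashlib.sha256(content).hexdigest() != manifest.get("sha256"):
        return False  # content was altered after it was labeled
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how much of the tag matched.
    return hmac.compare_digest(expected, label["tag"])


if __name__ == "__main__":
    key = b"example-secret-key"  # hypothetical key; real systems manage keys securely
    image = b"synthetic image bytes"
    label = label_content(image, "example-model", key)
    print(verify_label(image, label, key))            # True
    print(verify_label(image + b"edit", label, key))  # False
```

Binding the label to a hash of the content means that editing either the content or the label breaks verification, which is the property that distinguishes an authenticated label from a plain metadata tag.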

4.6 Soliciting Input on Dual-Use Foundation Models With Widely Available Model Weights

Section 4.6 addresses dual-use foundation AI models with publicly available weights. The order defines dual-use foundation models as AI models that are trained on broad data, use self-supervision, contain at least tens of billions of parameters, are applicable across a wide range of contexts, and exhibit high levels of performance at tasks that pose security risks. When a model’s weights are publicly available, open-source developers can replicate the model and build new applications from it. The order acknowledges that while openly distributing these models can fuel innovation and research, it also presents significant security risks, such as the removal of safeguards within the model that filter dangerous content. Within 270 days, the secretary of commerce must conduct a public consultation with the private sector, academia, civil society, and other stakeholders on the potential risks and benefits of dual-use foundation models with publicly available weights. This consultation addresses security concerns about misuse of these models as well as their benefits for AI innovation and research, including AI safety research. The secretary of commerce, in consultation with the secretary of state and other relevant agency heads, will then submit a report to the president covering the findings, including the possible implications of widely available model weights, and recommending policy and regulatory measures to balance these risks and benefits.

4.7 Promoting Safe Release and Preventing the Malicious Use of Federal Data for AI Training

Section 4.7 focuses on improving public access to federal data while managing the associated security risks per the Open, Public, Electronic, and Necessary (OPEN) Government Data Act. The section acknowledges the potential security risks that could arise when different government data sets are combined, which may not be evident when data is considered in isolation.
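The combination risk described above is sometimes called the mosaic effect. The toy Python example below, using entirely made-up records, illustrates it: one hypothetical release contains sensitive data without names, another contains names without sensitive data, and joining them on shared fields (here ZIP code, birth year, and sex) re-identifies people. The data sets, field names, and `link` function are illustrative assumptions and are not drawn from any federal data set or from the order itself.

```python
from collections import Counter

# Hypothetical release A: de-identified records (no names) with a sensitive field.
health = [
    {"zip": "20001", "birth_year": 1980, "sex": "F", "diagnosis": "asthma"},
    {"zip": "20001", "birth_year": 1975, "sex": "M", "diagnosis": "diabetes"},
    {"zip": "20002", "birth_year": 1990, "sex": "F", "diagnosis": "flu"},
]

# Hypothetical release B: a public roster with names but no sensitive data.
roster = [
    {"name": "Alice", "zip": "20001", "birth_year": 1980, "sex": "F"},
    {"name": "Bob", "zip": "20001", "birth_year": 1975, "sex": "M"},
    {"name": "Carol", "zip": "20002", "birth_year": 1990, "sex": "F"},
    {"name": "Dana", "zip": "20002", "birth_year": 1990, "sex": "F"},
]

# Fields that appear in both releases and can be used to join them.
QUASI_IDS = ("zip", "birth_year", "sex")


def key(record: dict) -> tuple:
    """Project a record onto the fields both releases share."""
    return tuple(record[f] for f in QUASI_IDS)


def link(sensitive: list, public: list) -> dict:
    """Re-identify records whose shared fields match exactly one public entry."""
    counts = Counter(key(p) for p in public)
    names = {key(p): p["name"] for p in public}
    matches = {}
    for rec in sensitive:
        k = key(rec)
        if counts[k] == 1:  # a unique match ties the record to one named person
            matches[names[k]] = rec["diagnosis"]
    return matches


if __name__ == "__main__":
    print(link(health, roster))  # {'Alice': 'asthma', 'Bob': 'diabetes'}
```

Neither release reveals a named person’s diagnosis on its own. The third health record escapes re-identification only because two people in the roster share its ZIP code, birth year, and sex, which is why such risks can surface only when data sets are reviewed together rather than in isolation.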

In collaboration with the secretary of defense, secretary of commerce, secretary of energy, secretary of homeland security, and the director of national intelligence, the Chief Data Officer Council will develop guidelines within 270 days for conducting security reviews of federal data. These reviews identify potential security risks, particularly those concerning the release of data that could assist in creating CBRN weapons or autonomous offensive cyber capabilities.

Within 180 days of the development of these initial guidelines, agencies must review their comprehensive data inventories to identify and prioritize potential security risks, especially those related to using the data to train AI systems. Agencies must then take appropriate steps, consistent with applicable law, to address these risks. This effort aims to balance wider public access to data with the precautions needed to prevent malicious use, such as using government data to develop autonomous offensive cyber capabilities.

4.8 Directing the Development of a National Security Memorandum

Section 4.8 calls for a coordinated approach to managing AI’s security risks in the national security context. Within 270 days, the assistant to the president for national security affairs and the deputy chief of staff for policy will lead an interagency effort to draft a National Security Memorandum on AI. The memorandum is to govern the use of AI in national security systems and for military and intelligence purposes, building on existing efforts to regulate AI development and use in these areas.

The memorandum is also intended to guide the Department of Defense, other relevant agencies, and the intelligence community in adopting AI capabilities to advance the U.S. national security mission. It will include direction on AI assurance and risk management practices, particularly for uses that could affect the rights or safety of U.S. persons and, where relevant, non-U.S. persons. Additionally, the memorandum will direct continued actions, consistent with applicable law, to counter potential threats from adversaries and other foreign actors who may use AI systems against the security interests of the United States or its allies.

Section 5: Promoting Innovation and Competition

Section 5 of the executive order focuses on fostering the growth of the AI workforce in the United States by simplifying visa applications and immigration procedures to attract AI talent (section 5.1). It also encourages AI innovation by implementing development programs and providing guidance on intellectual property risks (5.2). To enhance AI competition, it directs agencies to address anti-competitive practices and increase support and resources for small businesses (5.3).

5.1 Attracting AI Talent to the United States

Section 5.1 focuses on enhancing the United States’ ability to attract and retain AI talent. Within 90 days, the secretary of state and the secretary of homeland security must streamline visa processing and ensure sufficient visa appointments for AI professionals. The departments of State and Homeland Security are also tasked, within 120 days, with considering updates to the Exchange Visitor Skills List (a list of specialized knowledge and skills deemed necessary for the development of an exchange visitor’s home country) and considering a domestic visa renewal program, which could be expanded within 180 days to cover J-1 research scholars and STEM F-1 students.

Additionally, the secretaries are instructed to initiate the modernization of immigration pathways, including revising policies to clarify routes for AI experts to work in the U.S. and potentially become lawful permanent residents and to establish a program to identify and connect with top AI talent globally. This comprehensive approach aims to streamline immigration pathways and enhance the United States’ attractiveness as a destination for leading AI experts and researchers.

5.2 Promoting Innovation

Section 5.2 directs the director of the NSF to launch, within 90 days, a pilot program implementing the National AI Research Resource, which brings together computational, data, and other resources for AI research, and, within 150 days, to fund at least one NSF Regional Innovation Engine that prioritizes AI-related work. It also calls for establishing new National AI Research Institutes and directs the secretary of energy to launch a pilot program to expand AI training for scientists and researchers. The under secretary of commerce for intellectual property and director of the United States Patent and Trademark Office (USPTO) must issue guidance on AI and inventorship. The Department of Homeland Security must design a program to counter AI-related intellectual property risks. Moreover, the secretary of health and human services (HHS) and the secretary of veterans affairs are instructed to promote AI development in health care, including through AI Tech Sprint competitions.

5.3 Promoting Competition

Section 5.3 calls on agency heads to use their authorities to promote competition in the AI sector, including by addressing risks from dominant firms’ concentrated control over key inputs and by preventing those firms from unfairly disadvantaging smaller competitors. The section encourages the Federal Trade Commission (FTC) to consider using its authorities, including its rulemaking authority under the Federal Trade Commission Act, 15 U.S.C. § 41 et seq., to ensure fair competition in the AI marketplace and to protect consumers and workers from AI-related harms.

It also emphasizes the strategic importance of semiconductors, directing the secretary of commerce to foster a competitive and inclusive semiconductor industry that supports all players, including startups and small firms. The Small Business Administration administrator is instructed to prioritize funding for planning a Small Business AI Innovation and Commercialization Institute, allocate up to $2 million from the Growth Accelerator Fund Competition for AI development, and revise eligibility criteria for various programs to support AI initiatives.

Section 6: Supporting Workers

Section 6 focuses on supporting workers as AI is integrated into the labor market. It directs the chair of the Council of Economic Advisers to prepare a report on AI’s effects on the labor market. The order also requires the secretary of labor to evaluate and strengthen the government’s ability to support workers whom AI might displace.

The secretary of labor’s evaluation will consider existing federal support programs and potential new legislative measures. The secretary will also develop principles and best practices for employers to ensure that AI’s deployment benefits employees’ well-being, addresses job displacement risks, and complies with labor standards. Once these principles and best practices are developed, federal agencies are encouraged to incorporate them into their programs.

In addition, Section 6 calls for guidance making clear that employers that use AI to monitor or augment employees’ work must still compensate workers for their hours worked, as the Fair Labor Standards Act requires. The director of the National Science Foundation is also to prioritize AI-related education and workforce development, leveraging existing programs and identifying new opportunities to allocate resources toward a diverse, AI-ready workforce.

Section 7: Advancing Equity and Civil Rights

Section 7 addresses AI’s implications for equity and civil rights. It directs efforts to prevent AI-related civil rights violations in the criminal justice system (section 7.1), requires agencies to analyze how AI might unlawfully discriminate in or facilitate the administration of federal programs and benefits (7.2), and calls for guidance on preventing AI-driven discrimination in the broader economy, including in hiring and housing (7.3).

7.1 Strengthening AI and Civil Rights in the Criminal Justice System

Section 7.1 directs the attorney general to lead efforts against AI-related discrimination and civil rights violations. This work includes coordinating with agencies as they enforce existing federal laws against such violations, convening the heads of federal civil rights offices to discuss ways to combat algorithmic discrimination, and providing state and local authorities with guidance and training on best practices for investigating and prosecuting these violations.

The attorney general is also required to deliver a comprehensive report to the president on the implications of AI in the criminal justice system, detailing both the potential for increased efficiency and the risks involved. This report is to include recommendations for best practices and limitations on AI use to ensure adherence to privacy, civil liberties, and civil rights.

To foster an informed and technologically adept law enforcement workforce, the attorney general, in cooperation with the secretary of homeland security and with input from the interagency working group established under President Biden’s earlier executive order on policing (Executive Order 14074), will share strategies for recruiting and training law enforcement professionals with the necessary technical expertise in AI.

7.2 Protecting Civil Rights Related to Government Benefits and Programs

Section 7.2 emphasizes protecting civil rights in administering government benefits and programs, with a focus on the equitable use of AI. According to the order, agencies must work with their civil rights and civil liberties offices to prevent discrimination and other potential harms caused by AI in federal programs and benefits. This measure includes increasing engagement with various stakeholders and ensuring that these offices are involved in the relevant agency’s decisions regarding the design, development, acquisition, and use of AI.

Additionally, the section sets out specific directives for the secretary of HHS and the secretary of agriculture to ensure that AI-enabled public benefits processes at the state, local, tribal, and territorial levels are effective and fair. The secretary of HHS must publish a plan addressing the use of automated systems by states and localities in public benefits administration to ensure access, notification, evaluation, discretion, appeals, and equitable outcomes. Similarly, the secretary of agriculture must issue guidance to public benefits administrators on their use of automated systems to ensure access, adherence to merit system requirements, notification, appeals, human support, auditing, and analysis of equitable outcomes.

7.3 Strengthening AI and Civil Rights in the Broader Economy

Section 7.3 outlines measures to strengthen civil rights protections related to AI across the broader economy. It directs the secretary of labor to publish guidance within one year on nondiscrimination by federal contractors that use AI in hiring. It encourages federal financial and housing regulators to use their authorities to address bias arising from AI in housing and consumer financial markets, including by examining underwriting models and automated appraisal processes. The secretary of housing and urban development is to issue guidance within 180 days on how automated systems, such as tenant screening tools, may lead to discrimination in housing transactions, including through the use of certain types of data, and on how federal laws apply to digital advertising. Lastly, it calls on the Architectural and Transportation Barriers Compliance Board to engage with the public and provide recommendations and technical assistance on the use of biometric data in AI, with a focus on people with disabilities.

Section 8: Protecting Consumers, Patients, Passengers, and Students

Section 8 sets out measures to protect consumers, patients, passengers, and students from potential AI-related risks. Section 8(a) encourages independent regulatory agencies to use their authority to shield American consumers from fraud, discrimination, and privacy threats and to address risks that AI may pose to financial stability. This includes clarifying how existing regulations and guidance apply to AI, such as the responsibility of regulated entities to conduct due diligence on the AI services they use and to provide transparency about AI models and their applications.

Section 8(b) directs the secretary of HHS, in consultation with the secretary of defense and the secretary of veterans affairs, to establish an HHS AI Task Force. Within a year, the task force must craft a strategic plan for the responsible deployment of AI in health and human services, focusing on equity, oversight, and privacy. Within six months, the department must develop a quality assurance strategy for AI-enabled health tools, covering their performance before and after they reach the market, and consider actions to ensure compliance with nondiscrimination laws. HHS must also establish an AI safety program to capture clinical errors involving AI and circulate best practices, and it must develop a strategy for regulating the use of AI in drug development.

Section 8(c) addresses the use of AI in transportation. It directs the Department of Transportation to assess the need for guidance and regulation of AI in transportation and, within three months, to have its federal advisory committees advise on the safe and responsible use of AI. The department is also to explore AI’s broader opportunities and challenges in transportation, with that work due within six months.

Section 8(d) charges the Department of Education with creating resources, policies, and guidelines to ensure AI’s safe, responsible, and nondiscriminatory deployment in education. This measure includes considering the impact of AI on vulnerable and underserved communities. The department is also instructed to compile an “AI toolkit” for educational leaders derived from the department’s “AI and the Future of Teaching and Learning” report, concentrating on human review, trust and safety, and privacy within the educational sphere.

Section 8(e) encourages the Federal Communications Commission (FCC) to consider how AI can improve spectrum management, including by working with the National Telecommunications and Information Administration to create opportunities for spectrum sharing. The FCC is also encouraged to consider how AI can strengthen network security and resiliency and help combat unwanted robocalls and robotexts.

Section 9: Protecting Privacy

Section 9 focuses on “Protecting Privacy” in the context of AI technologies. It lays out several directives to mitigate privacy risks that may arise due to AI’s ability to collect and process personal information or make inferences about individuals.

(a) Responsibilities of the Director of the Office of Management and Budget (OMB)

Identification of Commercially Available Information (CAI): The director of the OMB must identify CAI procured by federal agencies, especially that which contains personally identifiable information. This process includes CAI obtained from data brokers and through vendors. The intention is to include such data in the appropriate agency inventory and reporting processes, except when used for national security purposes.

Evaluation of Agency Standards: The director of the OMB, with the Federal Privacy Council and the Interagency Council on Statistical Policy, must evaluate agency standards and procedures for handling CAI that contains personally identifiable information. This evaluation is to inform potential guidance to agencies on mitigating the privacy and confidentiality risks of their CAI-related activities, again excluding national security uses.

Request for Information (RFI): Within 180 days of the executive order, the director of the OMB, in consultation with the attorney general, the assistant to the president for economic policy, and the director of the Office of Science and Technology Policy (OSTP), must issue an RFI. Its purpose is to inform potential revisions to the guidance for implementing the privacy provisions of the E-Government Act of 2002, particularly in light of privacy risks heightened by AI.

Support and Advance RFI Outcomes: The director of the OMB must take the necessary steps, consistent with applicable law, to support and advance the near-term actions and long-term strategies identified through the RFI process. These steps may include issuing new or updated guidance or RFIs, or consulting with other agencies or the Federal Privacy Council.

(b) Guidelines for Privacy-Enhancing Technologies (PETs)

NIST Guidelines: Within 365 days, the secretary of commerce, through the director of NIST, is tasked with creating guidelines to help agencies evaluate the efficacy of protections guaranteed by differential privacy, particularly in AI. These guidelines should outline key factors influencing differential privacy safeguards and common risks to their practical realization.

(c) Advancing PETs Research and Implementation

Creation of a Research Coordination Network (RCN): Within 120 days, the director of the NSF, in collaboration with the secretary of energy, must fund the establishment of an RCN. This network will focus on advancing privacy research and on developing, deploying, and scaling PETs. It aims to facilitate information sharing, coordination, and collaboration among privacy researchers and to develop standards for the privacy research community.

NSF Engagement With Agencies: Within 240 days, the NSF director must work with agencies to identify ongoing work and potential opportunities for incorporating PETs into their operations. Where feasible, the NSF is to prioritize research, including work with the RCN, that encourages agencies to adopt leading-edge PET solutions.

Utilizing PETs Prize Challenge Outcomes: The director of the NSF is to use the results of the United States-United Kingdom PETs Prize Challenge to inform PETs research and adoption strategies. The challenge, announced at the Summit for Democracy, invited innovators across sectors to develop privacy-preserving solutions to societal challenges such as detecting financial crime and forecasting pandemic infection risk.

Section 10: Advancing Federal Government Use of AI

Section 10 of the executive order, which addresses the federal government’s own use of AI, mandates a comprehensive, strategic approach to AI integration, talent acquisition, and governance. It is designed to expand the federal government’s AI capabilities while safeguarding rights and ensuring transparency.

Within 60 days, the director of the OMB will convene and chair an interagency AI council, with the director of the OSTP serving as vice chair. The council will coordinate the development and use of AI across federal agencies, excluding AI used in national security systems. Its membership includes the heads of key agencies, the director of national intelligence, and representatives of other agencies identified by the chair. Until agencies designate permanent chief AI officers, they will be represented by officials at the assistant secretary level or equivalent, as determined by each agency head.

Further, within five months of the order, the OMB director is to issue detailed guidance on agencies’ management of AI. The guidance will require each agency to designate a chief AI officer responsible for coordinating the agency’s use of AI, promoting AI innovation, and managing AI risks, and to establish an internal AI governance board. It will also set minimum risk-management practices, such as public consultation, data-quality assessments, assessments of disparate impact, public notice of AI use, continuous monitoring of deployed AI, and human review of and remedies for adverse AI-driven decisions. Finally, the guidance will define AI uses presumed to affect rights or safety and offer recommendations on reducing barriers to responsible AI adoption and on external testing of AI systems.

Following the release of this guidance, the OMB director must develop a method for agencies to track and assess their AI adoption and risk management. The secretary of commerce will develop tools and guidelines to support agencies’ AI risk management, and the OMB director must develop a means of ensuring that agency contracts for AI systems and services align with the guidance and the order’s broader objectives. The OMB director must also issue annual instructions for agencies to report their AI use cases, improving transparency and accountability. Within six months, the director of the Office of Personnel Management (OPM) must release guidance on the federal workforce’s use of generative AI.

Under Section 10, the Technology Modernization Board is to consider prioritizing AI projects when allocating funding. Likewise, within six months, the administrator of general services must take steps to make it easier for agencies to access commercial AI capabilities. These federal AI governance provisions do not apply to national security systems, which are to be addressed separately in a national security memorandum. Taken together, these provisions reflect a deliberate push to strengthen AI governance and capability across the federal government, with an emphasis on interagency collaboration, innovation, risk management, and responsible deployment.

The order also makes a substantial push to build AI expertise within federal agencies. The directors of the OSTP and the OMB, in consultation with presidential advisers, must identify priority AI talent needs and streamline recruitment. The AI and Technology Talent Task Force is responsible for accelerating the growth of the federal AI workforce, tracking hiring progress, and promoting collaboration among AI experts across government.

The order also directs existing federal technology talent programs to scale up hiring for high-priority AI positions. The OPM director is to take steps to improve AI hiring practices, ranging from granting direct-hire authority to expanding skills-based hiring.

Agencies are empowered to use special hiring authorities and budgetary flexibilities to acquire AI talent quickly and to reflect these needs in their workforce plans. The Chief Data Officer Council will support this objective by developing a position-description library for data scientists (job series 1560) and a hiring guide to help agencies recruit them.

The order also directs agencies to provide AI training to employees across a range of roles so that the workforce develops a well-rounded understanding of AI. To address AI talent gaps in national defense, the order calls on the secretary of defense to submit a report with recommendations, including strategies for hiring and retaining critical noncitizen AI experts.

Section 11: Strengthening American Leadership Abroad

Section 11 sets out the United States’ intent to lead globally on artificial intelligence. It calls for deeper collaboration with international partners, the development of consistent AI standards worldwide, and the careful and safe deployment of AI technology.

Under this mandate, the secretary of state, in collaboration with the president’s national security and economic advisers and the director of the OSTP, is to lead these efforts outside military and intelligence contexts. This role involves broadening international partnerships and increasing other countries’ awareness of U.S. AI policies, with the goal of strengthening collaboration. The secretary of state is also responsible for leading the creation of an international framework for managing AI’s risks while capturing its benefits. This task includes encouraging international allies and partners to make voluntary commitments in line with those of U.S. companies, and working to harmonize regulatory and accountability principles suited to the risks of AI systems.

The secretary of commerce is also charged with leading a coordinated global effort to develop responsible technical standards for AI development and use. Within 270 days, the secretary must establish a plan for global engagement covering AI terminology, best practices for data handling, the trustworthiness and assurance of AI systems, and AI risk management. Within 180 days of establishing the plan, the secretary will submit a report to the president on priority actions taken under it. These efforts are to be guided by the NIST AI Risk Management Framework and the United States Government National Standards Strategy for Critical and Emerging Technology.

The order also commits to integrating AI responsibly into global development. Within a year, the secretary of state and the administrator of the United States Agency for International Development (USAID) are to publish an “AI in Global Development Playbook” that applies the AI Risk Management Framework’s principles to a diverse range of international contexts, drawing on lessons from the practical use of AI in development programs worldwide. They are also to develop a Global AI Research Agenda to guide AI-related research in international settings, with attention to safety, responsibility, and AI’s labor-market effects across regions.

Addressing the risks AI poses to critical infrastructure, especially across borders, is another key component of this section. In coordination with the secretary of state, the secretary of homeland security must initiate international cooperation efforts to fortify critical infrastructure against AI-related disruptions. This process includes formulating a multilateral engagement strategy that encourages the adoption of AI safety and security guidelines. A subsequent report to the president will detail priority actions for mitigating AI-associated risks to critical infrastructure in the United States.

Section 12: Implementation

Section 12 of the executive order establishes the White House Artificial Intelligence Council (White House AI Council) within the Executive Office of the President. The council will coordinate federal agency activities to ensure the effective development and implementation of AI-related policies, including those set out in the order. The assistant to the president and deputy chief of staff for policy will chair the council. Its members include the secretaries of state, the treasury, and defense, the heads of other departments and agencies, several assistants to the president, the director of national intelligence, and the directors of the NSF and the OMB. The chair may create subgroups within the council as needed.

Section 13: General Provisions

Section 13 clarifies that the executive order will not affect existing legal authorities of government agencies or the functions of the OMB director, that it is subject to available appropriations, and that it does not create any enforceable legal rights or benefits.